A new file format is making the rounds: LLMs.txt.

The pitch is simple: drop a /llms.txt file in the root of your site, list out your docs or key pages in Markdown, and voilà – Large Language Models will understand your site better when answering user questions.

Jeremy Howard (Answer.AI) proposed it. On November 14th, Mintlify jumped in, enabling thousands of dev docs to be “LLM-friendly” overnight. Anthropic, Cursor, Zapier, Hugging Face – they’ve all joined the parade.

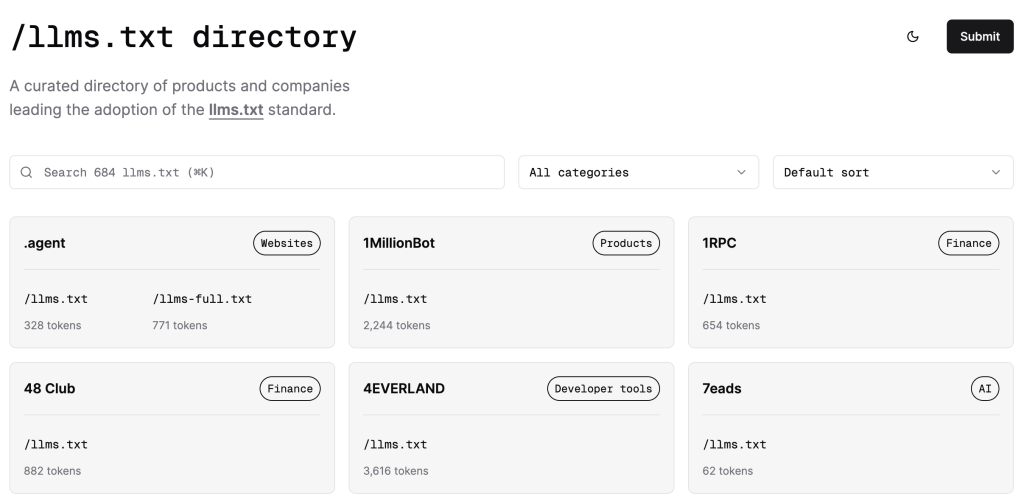

There’s even a directory: https://directory.llmstxt.cloud/.

And tools to generate it, validate it, expand it into model context.

So: Is this the next robots.txt, or just busy work for website owners?

The Promise

At its best, llms.txt is a map for answer engines.

Instead of letting ChatGPT or Perplexity guess their way through your site, you hand them a tidy digest:

- The most important links.

- Human-readable summaries.

- Clear Markdown chunks that LLMs actually parse.

In theory, this improves:

- Discovery (models find the right pages).

- Accuracy (answers cite your docs, not some scraped copy).

- Brand attribution (your domain shows up in generated responses).

For technical docs, API references, and changelogs, it’s a no-brainer. That’s why Mintlify, LangChain, and OpenPipe all embraced it.

The Reality Check

But here’s where it gets murky.

- Google’s John Mueller flat out said no consumer-facing LLM is fetching this file for training or grounding. A lot of people safely ignore John Mueller, as usually things happen opposite to what he says.

- No analytics: You can’t tell if models actually consume it.

- Maintenance pain: Every site update means resyncing another CMS-in-a-file.

- Terrible UX: If an LLM cites your raw

.md, users land on an ugly dump, not your branded site. (there’s a wayout for this) - Zero guarantees: You’re formatting content for LLMs with no promise of traffic, attribution, or conversions.

That’s why some folks, like PandaHub, skipped the standard entirely. Instead, they built a clean /llm-info page: styled, branded, canonical, and tracked like any other landing page.

Guess what? That worked better.

Versions in the Wild

Popular formats that LLMs are currently fed with.

/llms.txt→ a sitemap-style index. Example/llms-full.txt→ the whole shebang in one file (context window might be tricky). Example- Per-URL

.mdfiles → one clean doc per page. Example /llm-infopages → human-readable, brand-controlled alternative. A PandaHub approach. One styled, trackable URL controlling the narrative. They saw better visibility in ChatGPT and Perplexity, with solid attribution. Perks I loved:- Single-page simplicity.

- Brand-aligned and SEO-friendly indexing.

- Keeps users on your site for conversions.

Zapier, Anthropic, Hugging Face? They’re working on it..

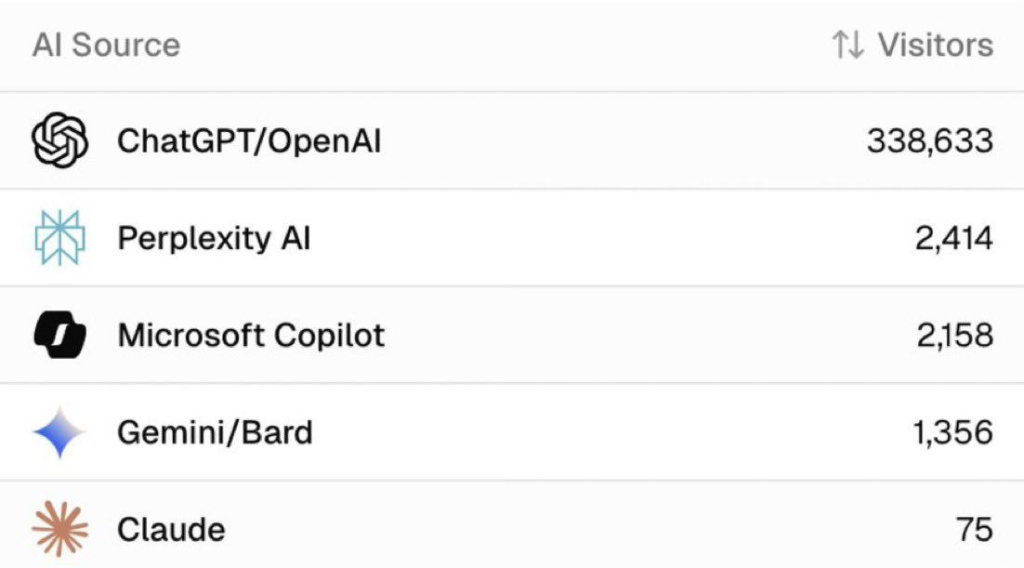

Perplexity? Actively consuming it.

Google? still not addressing it.

So – standard? Not there yet.

How It’s Structured

The spec is intentionally simple: a plain Markdown file, sitting at /llms.txt (or /llms-full.txt if you’re brave).

At a minimum, it includes:

- A title (H1) → usually the name of your project or site.

- A short summary (blockquote) → one-liner on what the site is about.

- Optional notes → details, quirks, compatibility, caveats.

- Sections (H2s) → grouping docs, guides, references.

- Links with descriptions → the real juice; each link is Markdown

[name](url): short description. - Some developers include: Timestamps, Version info, Token estimates for each file

Here’s a stripped-down example:

# FastHTML

> FastHTML is a Python library combining Starlette, Uvicorn, HTMX, and fastcore.

Important notes:- Not compatible with FastAPI.- Works with JS-native web components, not React/Vue/Svelte.

## Docs

- [Quick start](https://fastht.ml/docs/tutorials/quickstart_for_web_devs.html.md): Overview of features- [HTMX reference](https://github.com/bigskysoftware/htmx/blob/master/www/content/reference.md): Attributes, classes, headers, events

## Examples

- [Todo list app](https://github.com/AnswerDotAI/fasthtml/blob/main/examples/adv_app.py): CRUD demo with idiomatic patterns

That’s it. The whole point is: models parse Markdown better than cluttered HTML, so you hand them a digestible map of your site.

Implementation: How to Actually Do It

1. Decide your flavor

/llms.txt: index-style, light and link-based./llms-full.txt: inline all your docs (watch context window limits).- Per-URL

.md: one clean doc per resource. /llm-info: styled HTML alternative, friendlier for humans + AIs.

Preferred way: test all, as we don’t know what works the best yet.

2. Build the file

- Keep language concise.

- Use bullet points, headings, and short summaries.

- Link to canonical URLs, not random copies.

- Optional: add timestamps, version numbers, token estimates (handy for RAG pipelines).

3. Publish it

- Place it at the site root (

/llms.txt). - Make sure it’s publicly accessible.

- Validate it with tools like llmstxt.cloud.

4. Test it

- Paste into ChatGPT/Claude and ask: “What is X about?”

- See if the answers improve with your file in context.

- Adjust as needed.

5. Maintain it

- Helpful Hack or Hype? Update alongside your docs/marketing site.

- Keep it simple. If you’re rewriting whole docs just for llms.txt, you’re doing too much.

Don’ts

- Don’t overload it with 200+ links. LLMs will truncate.

- Don’t serve raw Markdown as the only entry point for humans.

- Don’t treat it as SEO. It’s not.

Ready to Explore?

Want your site to show up in AI answers, not just Google results?

Broader AI Visibility Tips That Complemented LLMs.txt

LLMs.txt isn’t a silver bullet-I paired it with timeless principles:

- Clarity Wins: Write naturally, like explaining to a colleague. Avoid ambiguity.

- Chunking: Break into headings, bullets-easy for skimming and parsing.

- E-E-A-T Signals: Cite sources, show expertise, build trust.

- Internal Linking: Creates a logical map for humans and AIs.

This targets “answer engines” beyond traditional SEO.

Advanced Use Cases I Tested & Guidelines

- E-commerce: Guide to products; block checkout paths.

- SaaS: Expose docs/blogs; hide dashboards.

- Agencies: Highlight cases; shield client reports.

- Clinics & Doctors: Showcase treatments, expertise, and conditions; hide patient data or booking portals.

My Take

Here’s the neutral-but-practical view:

- If you have dev docs or APIs:

Create a llms.txt. It’s low-lift, helps with RAG pipelines, and costs little. - If you care about brand, UX, and conversions:

Make a/llm-infopage instead. It’s easier to maintain, looks good, and doubles as a landing page for AI and humans. - If you’re expecting traffic from this:

This isn’t SEO 2.0. It’s not even robots.txt 2.0. Think of it as metadata for models – helpful, but not transformative. This does give results, but not the best place to rely, yet a no-brainer to try.

The Bigger Picture

In 2025, we’re all optimising for AI answers, not just Google search. Call it AEO (AI Engine Optimisation) or GEO (Generative Engine Optimisation) – the idea is the same:

- Write clear, chunked, attribution-friendly content.

- Keep it up-to-date.

- Build trust with sources, authorship, and E-E-A-T.

Whether you use /llms.txt or /llm-info, the real play is owning your narrative inside AI tools.

And that’s where this movement has value.

Not as a standard. Not yet.

But as a forcing function to ask: What do we want AI to know about our site? And how do we make it easy – for both the machines and the humans?

That’s the real optimisation.

Are you thinking about enhancing your AI Visibility?

Let’s make you LLM-visible.

LLMs already answer millions of questions daily about health, SaaS tools, agencies, and products.

If your content isn’t structured for them, your expertise might never surface in those answers.

That’s where we come in: we help founders, doctors, and teams create custom /llms.txt and /llm-info setups that boost your brand visibility inside ChatGPT, Claude, and Perplexity, not just Google.

We’ve built AI-visibility files for:

🏥 Medical professionals (clinic expertise recognition)

⚙️ SaaS teams (developer docs + changelogs)

🎨 Agencies (case study indexing without leaking client data)